AI crawlers like GPTBot and ClaudeBot are overwhelming websites with aggressive traffic spikes; one user reported 30TB of bandwidth consumed in a month. These bots strain shared hosting environments, causing slowdowns that hurt SEO and user experience. Unlike traditional search crawlers, AI bots request large batches of pages in short bursts without following bandwidth-saving guidelines. Dedicated servers provide essential control through rate limiting, IP filtering, and custom caching, protecting your site’s performance against this growing trend.

No, you’re not imagining things.

If you’ve recently checked your server logs or analytics dashboard and spotted strange user agents like GPTBot or ClaudeBot, you’re seeing the impact of a new wave of visitors: AI and LLM crawlers.

These bots are part of large-scale efforts by AI companies to train and refine their large language models. Unlike traditional search engine crawlers that index content methodically, AI crawlers operate a bit more… aggressively.

To put it into perspective, OpenAI’s GPTBot generated 569 million requests in a single month on Vercel’s network. For websites on shared hosting plans, that kind of automated traffic can cause real performance headaches.

This article addresses the #1 question from hosting and sysadmin forums: “Why is my site suddenly slow or using so much bandwidth without more real users?” You’ll also learn how switching to a dedicated server can give you back the control, stability, and speed you need.

Understanding AI and LLM Crawlers and Their Impact

What Are AI Crawlers?

AI crawlers, also known as LLM crawlers, are automated bots designed to extract large volumes of content from websites to feed artificial intelligence systems.

These crawlers are operated by major tech companies and research groups working on generative AI tools. The most active and recognizable AI crawlers include:

- GPTBot (OpenAI)

- ClaudeBot (Anthropic)

- PerplexityBot (Perplexity AI)

- Google-Extended (Google)

- Amazonbot (Amazon)

- CCBot (Common Crawl)

- Yeti (Naver’s AI crawler)

- Bytespider (ByteDance, TikTok’s parent company)

New crawlers are emerging frequently as more companies enter the LLM space. This rapid growth has introduced a new class of traffic that behaves differently from conventional web bots.

How AI Crawlers Differ from Traditional Search Bots

Traditional bots like Googlebot or Bingbot crawl websites in an orderly, rules-abiding fashion. They index your content to display in search results and typically throttle requests to avoid overwhelming your server.

AI crawlers, as we pointed out earlier, are much more aggressive. They:

- Request large batches of pages in short bursts

- Disregard crawl delays or bandwidth-saving guidelines

- Extract full page text and sometimes attempt to follow dynamic links or scripts

- Operate at scale, often scanning thousands of websites in a single crawl cycle

One Reddit user reported that GPTBot alone consumed 30TB of bandwidth from their site in just one month, without any clear business benefit to the site owner.

Image credit: Reddit user, Isocrates Noviomagi

Incidents like this are becoming more common, especially among websites with rich textual content like blogs, documentation pages, or forums.

If your bandwidth usage is increasing but human traffic isn’t, AI crawlers may be to blame.

Why Shared Hosting Environments Struggle

When you’re on a shared server, your site performance isn’t just affected by your visitors; it’s also influenced by what everyone else on the server is dealing with. And lately, what they’re all dealing with is a silent surge in “fake” traffic that eats up CPU and memory and runs up your bandwidth bill in the background.

This sets the stage for a bigger discussion: how can website owners protect performance in the face of rising AI traffic?

The Hidden Costs of AI Crawler Traffic on Shared Hosting

Shared hosting is ideal if your priority is affordability and simplicity, but it comes with trade-offs.

When multiple websites live on the same server, they share finite resources like CPU, RAM, bandwidth, and disk I/O. This setup works well when traffic stays predictable, but AI crawlers don’t play by those rules. Instead, they tend to generate intense and sudden spikes in traffic.

A recurring issue in shared hosting is what’s called “noisy neighbor syndrome.” One site experiencing high traffic or resource consumption ends up affecting everyone else. In the case of AI crawlers, it only takes one site attracting attention from these bots to destabilize performance across the server.

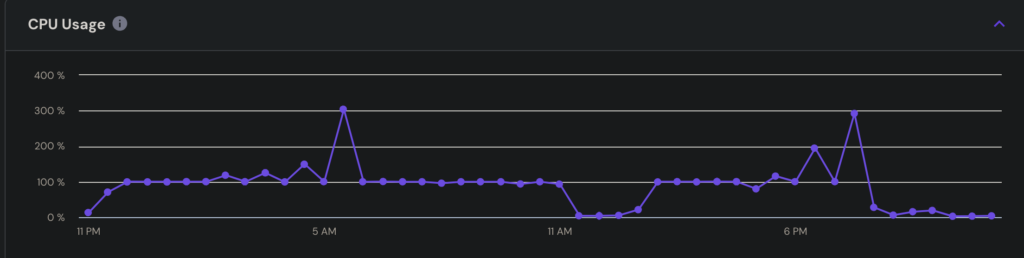

This isn’t theoretical. System administrators have reported CPU usage spiking to 300% during peak crawler activity, even on optimized servers.

Image source: GitHub user, ‘galacoder’

On shared infrastructure, these spikes can lead to throttling, temporary outages, or delayed page loads for every customer hosted on that server.

And because this traffic is machine-generated, it doesn’t convert and it doesn’t engage; in online advertising terms, it’s classified as GIVT (General Invalid Traffic).

And if performance issues aren’t enough, AI crawler traffic also affects your technical SEO, because it drags down site speed.

Google has made it clear: slow-loading pages hurt your rankings. Core Web Vitals such as Largest Contentful Paint (LCP) feed into Google’s ranking systems, and a slow Time to First Byte (TTFB), while not itself a Core Web Vital, pushes LCP later. If crawler traffic delays your load times, it can chip away at your visibility in organic search, costing you clicks, customers, and conversions.

And since many of these crawlers provide no SEO benefit in return, their impact can feel like a double loss: degraded performance and no upside.

Dedicated Servers: Your Shield Against AI Crawler Overload

Unlike shared hosting, dedicated servers isolate your site’s resources, meaning no neighbors, no competition for bandwidth, and no slowdown from someone else’s traffic.

A dedicated server gives you the keys to your infrastructure. That means you can:

- Modify server-level caching insurance policies

- Fine-tune firewall rules and access control lists

- Implement custom scripts for traffic shaping or bot mitigation

- Set up advanced logging and alerting to catch crawler surges in real time

This level of control isn’t available on shared hosting or even most VPS environments. When AI bots spike resource usage, being able to proactively defend your stack is essential. With dedicated infrastructure, you can absorb traffic spikes without losing performance. Your backend systems (checkout pages, forms, login flows) continue to function as expected, even under load.

That kind of reliability translates directly to customer trust. When every click counts, every second saved matters.

Dedicated Hosting Pays for Itself

It’s true: dedicated hosting costs more up front than shared or VPS plans. But when you account for the hidden costs of crawler-related slowdowns (lost traffic, SEO drops, support tickets, and missed conversions), the equation starts to shift.

A dedicated server doesn’t just eliminate the symptoms; it removes the root cause. For websites generating revenue or handling sensitive interactions, the stability and control it offers often pays for itself within months.

Controlling AI Crawlers With Robots.txt and LLMS.txt

If your site is experiencing unexpected slowdowns or resource drain, limiting bot access may be one of the most effective ways to restore stability without compromising your user experience.

Robots.txt Still Matters

Most AI crawlers from major providers like OpenAI and Anthropic now respect robots.txt directives. By setting clear disallow rules in this file, you can instruct compliant bots not to crawl your site.

It’s a lightweight way to reduce unwanted traffic without needing to install firewalls or write custom scripts. And many companies already use it for managing SEO crawlers, so extending it to AI bots is a natural next step.
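To make the disallow rules concrete, here is a minimal sketch that checks a robots.txt policy with Python’s standard `urllib.robotparser`. The policy text, the `is_allowed` helper, and the `example.com` URL are illustrative assumptions, not a recommended configuration; adjust the user-agent list to the bots you actually see in your logs.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block two AI crawlers site-wide, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a compliant crawler with this user agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "ClaudeBot", "Googlebot"):
        print(agent, is_allowed(ROBOTS_TXT, agent, "https://example.com/blog/"))
```

Keep in mind that this only models what a compliant crawler would do. Bots that ignore robots.txt need the server-level controls covered later in this article.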

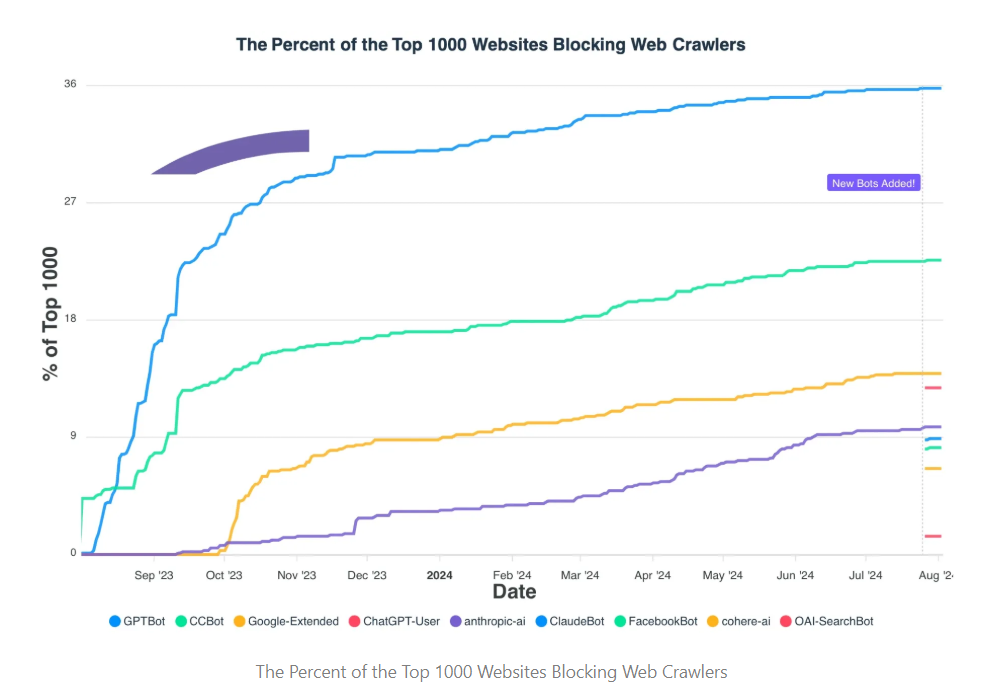

By August 2024, over 35% of the top 1000 websites in the world had blocked GPTBot using robots.txt. It’s a sign that site owners are taking back control over how their content is accessed.

Image source: PPC Land

A New File for a New Problem: LLMS.txt

In addition to robots.txt, a newer proposed standard called llms.txt is starting to gain attention. While still in its early adoption phase, it gives site owners another option to define how (or whether) their content can be used in large language model training.

Unlike robots.txt, which is focused on crawl behavior, llms.txt helps clarify permissions related specifically to AI data usage. It’s a subtle but important shift as AI development increasingly intersects with web publishing.

Using both files together gives you a fuller toolkit for managing crawler traffic, especially as new bots appear and training models evolve.

Below is a feature-by-feature comparison of robots.txt and llms.txt:

| Feature | robots.txt | llms.txt |
| --- | --- | --- |
| Primary Purpose | Controls how crawlers index and access web content | Informs AI crawlers about content usage for LLM training |
| Supported Crawlers | Search engines and general-purpose bots (Googlebot, Bingbot, GPTBot, etc.) | AI-specific bots (e.g. GPTBot, ClaudeBot) |
| Standard Status | Long-established and widely supported | Emerging and unofficial, not yet a universal standard |
| Compliance Type | Voluntary (but respected by major crawlers) | Voluntary and even more limited in adoption |
| File Location | Root directory of the website (yourdomain.com/robots.txt) | Root directory of the website (yourdomain.com/llms.txt) |
| Granularity | Allows granular control over directories and URLs | Aims to express intent around training usage and policy |
| SEO Impact | Can directly impact search visibility if misconfigured | No direct SEO impact; focused on AI content training |

Choose the Right Strategy for Your Business

Not every website needs to block AI bots entirely. For some, increased visibility in AI-generated answers could be beneficial. For others, especially those concerned with content ownership, brand voice, or server load, limiting or fully blocking AI crawlers may be the smarter move.

If you’re unsure, start by reviewing your server logs or analytics platform to see which bots are visiting and how frequently. From there, you can adjust your approach based on performance impact and business goals.
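As a starting point for that log review, the sketch below tallies requests and bytes served per AI crawler from access-log lines. It assumes the common Apache/Nginx “combined” log format; the `AI_BOTS` list, the `summarize_bots` helper, and the sample lines (including the simplified user-agent strings and documentation-range IPs) are illustrative assumptions rather than real traffic.

```python
import re
from collections import defaultdict

# Substrings to look for in the User-Agent header (extend as new bots appear).
AI_BOTS = ("GPTBot", "ClaudeBot", "PerplexityBot", "Amazonbot", "CCBot", "Bytespider")

# Combined Log Format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} (?P<bytes>\d+|-) "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_bots(lines):
    """Return {bot_name: {"requests": n, "bytes": total}} for known AI crawlers."""
    totals = defaultdict(lambda: {"requests": 0, "bytes": 0})
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent").lower()
        size = match.group("bytes")
        for bot in AI_BOTS:
            if bot.lower() in agent:
                totals[bot]["requests"] += 1
                totals[bot]["bytes"] += 0 if size == "-" else int(size)
                break
    return dict(totals)


# Made-up sample lines for illustration only.
SAMPLE = [
    '192.0.2.10 - - [01/Jan/2025:00:00:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.10 - - [01/Jan/2025:00:00:02 +0000] "GET /docs/ HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [01/Jan/2025:00:00:03 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.5 - - [01/Jan/2025:00:00:04 +0000] "GET /faq/ HTTP/1.1" 304 - "-" "ClaudeBot/1.0"',
]

if __name__ == "__main__":
    for bot, stats in summarize_bots(SAMPLE).items():
        print(f"{bot}: {stats['requests']} requests, {stats['bytes']} bytes")
```

To run it against a real log, pass an open file object instead of `SAMPLE`. User-agent strings can be spoofed, so treat the totals as an estimate rather than proof of who is crawling.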

Learn more about choosing the right business hosting solution for you.

Technical Strategies That Require Dedicated Server Access

Dedicated servers unlock the technical flexibility needed to not just respond to crawler activity, but get ahead of it.

- Implementing Rate Limits

One of the most effective ways to control server load is by rate-limiting bot traffic. This involves setting limits on how many requests can be made in a given timeframe, which protects your site from being overwhelmed by sudden spikes.

But to do this properly, you need server-level access, and that’s not something shared environments typically provide. On a dedicated server, rate limiting can be customized to suit your business model, user base, and bot behavior patterns.
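One common way to implement this kind of limit is a token bucket: each client gets a small burst allowance that refills at a steady rate. The sketch below is a minimal in-memory version, keyed by whatever identifier you choose (user agent, IP address, or both). The `BotRateLimiter` name and the rate and burst values are assumptions for illustration; production setups usually enforce this in the web server or firewall rather than in application code.

```python
import time


class BotRateLimiter:
    """Token-bucket limiter: `burst` requests up front, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, burst: int):
        self.rate = rate_per_sec
        self.burst = float(burst)
        self._buckets = {}  # key -> (tokens_remaining, last_seen_timestamp)

    def allow(self, key: str, now: float = None) -> bool:
        """Return True if the request identified by `key` is within its limit."""
        now = time.monotonic() if now is None else now
        tokens, last = self._buckets.get(key, (self.burst, now))
        # Refill based on elapsed time, never exceeding the burst capacity.
        tokens = min(self.burst, tokens + max(0.0, now - last) * self.rate)
        allowed = tokens >= 1.0
        if allowed:
            tokens -= 1.0
        self._buckets[key] = (tokens, now)
        return allowed


if __name__ == "__main__":
    limiter = BotRateLimiter(rate_per_sec=1.0, burst=3)
    # Five requests arriving at the same instant: the first three pass.
    print([limiter.allow("GPTBot", now=0.0) for _ in range(5)])
```

The optional `now` argument exists so the behavior can be tested with fixed timestamps. In normal use you would omit it and let the limiter read the monotonic clock.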

- Blocking and Filtering by IP

Another powerful tool is IP filtering. You can allow or deny traffic from specific IP ranges known to be associated with aggressive bots. With advanced firewall rules, you can segment traffic, limit access to sensitive parts of your site, or even redirect unwanted bots elsewhere.

Again, this level of filtering depends on having full control of your hosting environment, something shared hosting can’t offer.
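The core of IP filtering is a membership check against a list of CIDR ranges. Here is a small sketch using Python’s standard `ipaddress` module. The ranges shown are reserved documentation blocks used as placeholders, not real crawler ranges; in practice you would load the ranges your logs or a crawler operator’s published list point to, and typically enforce them in the firewall itself.

```python
import ipaddress

# Placeholder CIDR ranges (reserved documentation blocks), NOT real crawler ranges.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/25"),
]


def is_blocked(ip: str, networks=BLOCKED_NETWORKS) -> bool:
    """Return True if the address falls inside any blocked range.

    Raises ValueError if `ip` is not a valid IPv4 or IPv6 address.
    """
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)


if __name__ == "__main__":
    for candidate in ("192.0.2.55", "198.51.100.200", "203.0.113.9"):
        print(candidate, "blocked" if is_blocked(candidate) else "allowed")
```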

- Smarter Caching for Smarter Bots

Most AI crawlers request the same high-value pages repeatedly. With a dedicated server, you can set up caching rules specifically designed to handle bot traffic. That might mean serving static versions of your most-requested pages or creating separate caching logic for known user agents.

This reduces load on your dynamic backend and keeps your site fast for real users.
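As a rough illustration of separate caching logic for known user agents, the sketch below serves bots a cached copy of each page for a fixed time while human visitors always get a fresh render. The `BotPageCache` class, the `BOT_MARKERS` list, and the one-hour default are hypothetical choices; a real deployment would more likely do this in a reverse proxy or the web server’s cache layer.

```python
import time

# Lowercase substrings that identify the crawlers to serve from cache.
BOT_MARKERS = ("gptbot", "claudebot", "perplexitybot", "bytespider")


class BotPageCache:
    """Serve cached pages to known bots; render fresh pages for everyone else."""

    def __init__(self, render, ttl_seconds: float = 3600.0):
        self.render = render      # callable: path -> HTML string
        self.ttl = ttl_seconds
        self._store = {}          # path -> (expires_at, html)

    def get(self, path: str, user_agent: str, now: float = None) -> str:
        now = time.monotonic() if now is None else now
        is_bot = any(marker in user_agent.lower() for marker in BOT_MARKERS)
        if not is_bot:
            return self.render(path)  # real users bypass the bot cache
        cached = self._store.get(path)
        if cached is not None and cached[0] > now:
            return cached[1]          # still fresh: no backend work
        html = self.render(path)
        self._store[path] = (now + self.ttl, html)
        return html


if __name__ == "__main__":
    renders = []

    def render(path):
        renders.append(path)
        return f"<html>{path}</html>"

    cache = BotPageCache(render)
    cache.get("/blog/", "GPTBot/1.0", now=0.0)
    cache.get("/blog/", "GPTBot/1.0", now=10.0)
    print(len(renders), "backend render(s) for two bot requests")
```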

- Load Balancing and Scaling

When crawler traffic surges, load balancing ensures traffic is distributed evenly across your infrastructure. This kind of solution is only available through dedicated or cloud-based setups. It’s essential for businesses that can’t afford downtime or delays, especially during peak hours or product launches.

If your hosting plan can’t scale on demand, you’re not protected against sudden bursts of traffic. Dedicated infrastructure gives you that peace of mind.

Future-Proofing Your Website With Scalable Infrastructure

AI crawler traffic isn’t a passing trend. It’s growing, and fast. As more companies launch LLM-powered tools, the demand for training data will keep increasing. This means more crawlers, more requests, and more strain on your infrastructure.

Image source: Sam Achek on Medium

Developers and IT teams are already planning for this shift. In more than 60 forum discussions, one question keeps showing up:

“How should we adapt our infrastructure in light of AI?”

The answer often comes down to one word: flexibility.

Dedicated Servers Give You Room to Grow

Unlike shared hosting, dedicated servers aren’t limited by rigid configurations or traffic ceilings. You control the environment. That means you can test new bot mitigation strategies, introduce more advanced caching layers, and scale your performance infrastructure without needing to migrate platforms.

If an AI crawler’s behavior changes next quarter, your server setup can adapt immediately.

Scaling Beyond Shared Hosting Limits

With shared hosting, you’re limited by the needs of the lowest common denominator. You can’t expand RAM, add more CPUs, or configure load balancers to absorb traffic surges. That makes scaling painful and often disruptive.

Dedicated servers, on the other hand, give you access to scaling options that grow with your business. Whether that means adding more resources, integrating content delivery networks, or splitting traffic between machines, the infrastructure can grow when you need it to.

Think Long-Term

AI traffic isn’t just a technical challenge. It’s a business one. Every slowdown, timeout, or missed visitor has a cost. Investing in scalable infrastructure today helps you avoid performance issues tomorrow.

A strong hosting foundation lets you evolve with technology instead of reacting to it. And when the next wave of AI tools hits, you’ll be ready.

SEO Implications of AI Crawler Management

“Will blocking bots hurt your rankings?” This question has been asked over 120 times in discussions across Reddit, WebmasterWorld, and niche marketing forums.

At InMotion Hosting, our short answer? Not necessarily.

AI crawlers like GPTBot and ClaudeBot are not the same as Googlebot. They don’t influence your search rankings. They’re not indexing your pages for visibility. Instead, they’re gathering data to train AI models.

Blocking them won’t remove your content from Google Search. But it can improve performance, especially if these bots are slowing your site down.

Focus on Speed, Not Just Visibility

Google has confirmed that site speed plays a role in search performance. If your pages take too long to load, your rankings can drop. This holds regardless of whether the slowdown comes from human traffic, server issues, or AI bots.

Heavy crawler traffic can push your response times past acceptable limits. That affects your Core Web Vitals scores. And those scores are now key signals in Google’s ranking algorithm.

Image source: Google PageSpeed Insights

If your server is busy responding to AI crawlers, your real users (and Googlebot) might be left waiting.

Balance Is Key

You don’t have to choose between visibility and performance. Tools like robots.txt let you allow search bots while limiting or blocking AI crawlers that don’t add value.

Start by reviewing your traffic. If AI bots are causing slowdowns or errors, take action. Improving site speed helps both your users and your SEO.

Migrating From Shared Hosting to a Dedicated Server: The Process

What does it take to make the shift from shared hosting to a dedicated server? Generally, this is what the process involves:

- Running a performance benchmark on the current shared environment

- Scheduling the migration during off-peak hours to avoid customer impact

- Copying site files, databases, and SSL certificates to the new server

- Updating DNS settings and testing the new environment

- Blocking AI crawlers via robots.txt and fine-tuning server-level caching

Of course, with InMotion Hosting’s best-in-class support team, all this is no hassle at all.

Conclusion

AI crawler traffic isn’t slowing down.

Dedicated hosting offers a reliable solution for businesses experiencing unexplained slowdowns, rising server costs, or performance issues tied to automated traffic. It gives you full control over server resources, bot management, and infrastructure scalability.

If you’re unsure whether your current hosting can keep up, review your server logs. Look for spikes in bandwidth usage, unexplained slowdowns, or unfamiliar user agents. If these signs are present, it may be time to upgrade.

Protect your site speed from AI crawler traffic with a dedicated server solution that gives you the power and control to manage bots without sacrificing performance.